Thank you!

We have received your request. We will reach out to you soon!!

Improving Sequence Tagging for Vietnamese Text Using Transformer-based Neural Models

Content

Authors: Viet Bui The, Oanh Tran Thi, Phuong Le-Hong

Comments: Accepted at the Conference PACLIC 2020

Subjects: Computation and Language (cs.CL)

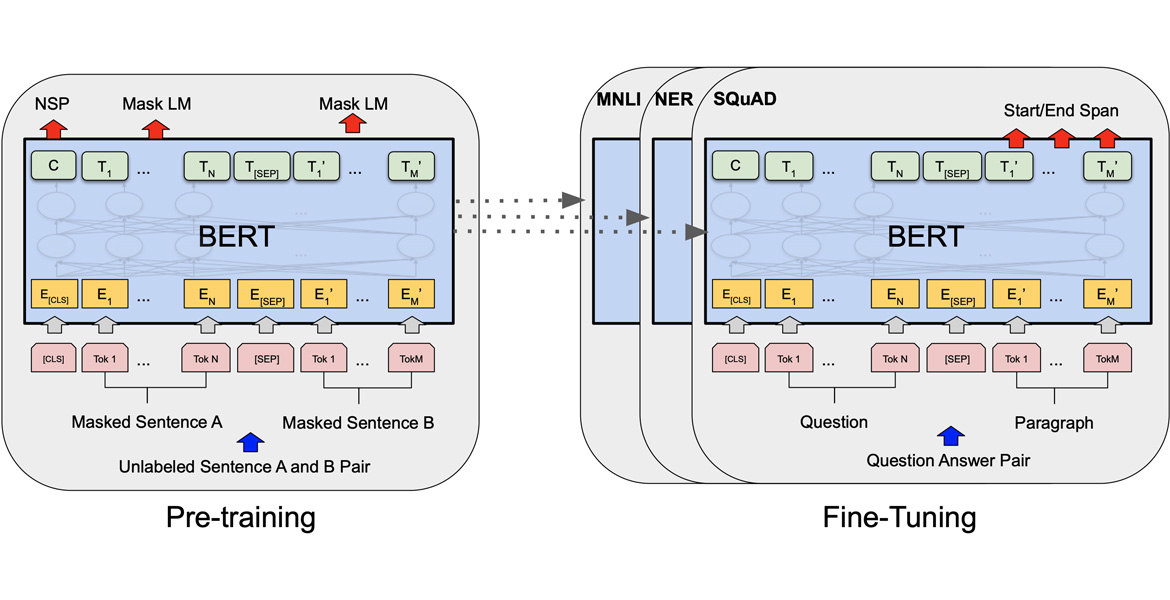

Abstract: This paper describes our study on using mutilingual BERT embeddings and some new neural models for improving sequence tagging tasks for the Vietnamese language. We propose new model architectures and evaluate them extensively on two named entity recognition datasets of VLSP 2016 and VLSP 2018, and on two part-of-speech tagging datasets of VLSP 2010 and VLSP 2013. Our proposed models outperform existing methods and achieve new state-of-the-art results. In particular, we have pushed the accuracy of part-of-speech tagging to 95.40% on the VLSP 2010 corpus, to 96.77% on the VLSP 2013 corpus; and the F1 score of named entity recognition to 94.07% on the VLSP 2016 corpus, to 90.31% on the VLSP 2018 corpus. Our code and pre-trained models viBERT and vELECTRA are released as open source to facilitate adoption and further research

Published: 6/29/2020

See more: https://arxiv.org/abs/2006.15994

Do you need a workthrough of our platform? Let us know

Do you need a workthrough of our platform? Let us know

Acknowledging the potential of AI Agents, a survey conducted by Khmel indicates that 82% of businesses plan to deploy these systems into their organizations in the next three years (2024). By the end of 2025, there will be approximately 50 to 100 billion AI agents integrated into various types of businesses, providing human workers with … Continued

Content Authors: Tran Duc Dung; Genci Capi Comments: Accepted at the Conference PACLIC 2020 Abstract: Mapping is a crucial task for robot navigation. Especially, in order to develop a fully autonomous robot that can interact well with human, the map is not only required to contain geometry but also the semantic contents. Building a detailed map of the … Continued

Content Authors: Dinh Viet Sang; Nguyen Duc Minh Abstract: Semantic segmentation from aerial imagery is one of the most essential tasks in the field of remote sensing with various potential applications ranging from map creation to intelligence service. One of the most challenging factors of these tasks is the very heterogeneous appearance of artificial objects like buildings, … Continued

Content Authors: Tran Duc Dung; Delowar Hossain; Shin-ichiro Kaneko; Genci Kapi Abstract: Robot localization is an important task for mobile robot navigation. There are many methods focused on this issue. Some methods are implemented in indoor and outdoor environments. However, robot localization in textureless environments is still a challenging task. This is because in these environments, the scene … Continued

Content Authors: Delowar Hossain, Sivapong Nilwong, Duc Dung Tran, Genci Capi Abstract: Many objects in household and industrial environments are commonly found partially occluded. In this paper, we address the problem of recognizing objects for use in partially occluded object recognition. To enable the use of more expensive features and classifiers, a region proposal network (RPN) which … Continued

Content Authors: Luong Chi Tho, Tran Thi Oanh Abstract: This paper presents the task of deeply analyzing user requests: the situation in ordering bots where users input an utterance, the bots would hopefully extract its full product descriptions and then parse them to recognize each product information (PI). This information is useful to help bots better understand … Continued

Content Authors: Tran The Trung, Nguyen Minh Hai, Ha Minh Hoang, Hoang Thai Dinh, Eryk Dutkiewicz, Diep N. Nguyen Abstract: The minimum dominating set problem (MDSP) aims to construct the minimum-size subset D⊂VD⊂V of a graph G=(V,E)G=(V,E) such that every vertex has at least one neighbor in D. The problem is proved to be NP-hard. In a recent industrial application, we encountered a more general variant … Continued

Content Authors: Oanh Tran, Tu Pham, Vu Dang, Bang Nguyen Abstract: This paper introduces a large-scale human-labeled dataset for the Vietnamese POS tagging task on conversational texts. To this end, wepropose a new tagging scheme (with 36 POS tags) consisting of exclusive tags for special phenomena of conversational words, developthe annotation guideline and manually annotate 16.310K sentences … Continued

Content Authors: Dang Hoang Vu, Van Huy Nguyen, Phuong Le-Hong Abstract: Hybrid models of speech recognition combine a neural acoustic model with a language model, which rescores the output of the acoustic model to find the most linguistically likely transcript. Consequently the language model is of key importance in both open and domain specific speech recognition and … Continued

Content Authors: Luong Chi Tho, Le Hong Phuong Abstract: This work investigates the task-oriented dialogue problem in mixed-domain settings. We study the effect of alternating between different domains in sequences of dialogue turns using two related state-of-the-art dialogue systems. We first show that a specialized state tracking component in multiple domains plays an important role and gives … Continued

Content Authors: The-Tuyen Nguyen; Xuan-Luong Vu; Phuong Le-Hong Abstract: In this paper, we report our work on building linguistic resources for Vietnamese social network text analysis in multiple domains. We first describe our annotation methodology including guidelines development, annotation softwares and quality assurance. We then present results of the first pilot phase of the project. Finally, we outline some … Continued

Content Authors: Minh Hai Nguyen, Minh Hoàng Hà, Diep N. Nguyen & The Trung Tran Abstract: The well-known minimum dominating set problem (MDSP) aims to construct the minimum-size subset of vertices in a graph such that every other vertex has at least one neighbor in the subset. In this article, we study a general version of the … Continued

Acknowledging the potential of AI Agents, a survey conducted by Khmel indicates that 82% of businesses plan to deploy these systems into their organizations in the next three years (2024). By the end of 2025, there will be approximately 50 to 100 billion AI agents integrated into various types of businesses, providing human workers with … Continued

Content Authors: Tran Duc Dung; Genci Capi Comments: Accepted at the Conference PACLIC 2020 Abstract: Mapping is a crucial task for robot navigation. Especially, in order to develop a fully autonomous robot that can interact well with human, the map is not only required to contain geometry but also the semantic contents. Building a detailed map of the … Continued

Content Authors: Dinh Viet Sang; Nguyen Duc Minh Abstract: Semantic segmentation from aerial imagery is one of the most essential tasks in the field of remote sensing with various potential applications ranging from map creation to intelligence service. One of the most challenging factors of these tasks is the very heterogeneous appearance of artificial objects like buildings, … Continued

Content Authors: Tran Duc Dung; Delowar Hossain; Shin-ichiro Kaneko; Genci Kapi Abstract: Robot localization is an important task for mobile robot navigation. There are many methods focused on this issue. Some methods are implemented in indoor and outdoor environments. However, robot localization in textureless environments is still a challenging task. This is because in these environments, the scene … Continued

Content Authors: Delowar Hossain, Sivapong Nilwong, Duc Dung Tran, Genci Capi Abstract: Many objects in household and industrial environments are commonly found partially occluded. In this paper, we address the problem of recognizing objects for use in partially occluded object recognition. To enable the use of more expensive features and classifiers, a region proposal network (RPN) which … Continued

Content Authors: Luong Chi Tho, Tran Thi Oanh Abstract: This paper presents the task of deeply analyzing user requests: the situation in ordering bots where users input an utterance, the bots would hopefully extract its full product descriptions and then parse them to recognize each product information (PI). This information is useful to help bots better understand … Continued

Content Authors: Tran The Trung, Nguyen Minh Hai, Ha Minh Hoang, Hoang Thai Dinh, Eryk Dutkiewicz, Diep N. Nguyen Abstract: The minimum dominating set problem (MDSP) aims to construct the minimum-size subset D⊂VD⊂V of a graph G=(V,E)G=(V,E) such that every vertex has at least one neighbor in D. The problem is proved to be NP-hard. In a recent industrial application, we encountered a more general variant … Continued

Content Authors: Oanh Tran, Tu Pham, Vu Dang, Bang Nguyen Abstract: This paper introduces a large-scale human-labeled dataset for the Vietnamese POS tagging task on conversational texts. To this end, wepropose a new tagging scheme (with 36 POS tags) consisting of exclusive tags for special phenomena of conversational words, developthe annotation guideline and manually annotate 16.310K sentences … Continued

Content Authors: Dang Hoang Vu, Van Huy Nguyen, Phuong Le-Hong Abstract: Hybrid models of speech recognition combine a neural acoustic model with a language model, which rescores the output of the acoustic model to find the most linguistically likely transcript. Consequently the language model is of key importance in both open and domain specific speech recognition and … Continued

Content Authors: Luong Chi Tho, Le Hong Phuong Abstract: This work investigates the task-oriented dialogue problem in mixed-domain settings. We study the effect of alternating between different domains in sequences of dialogue turns using two related state-of-the-art dialogue systems. We first show that a specialized state tracking component in multiple domains plays an important role and gives … Continued

Content Authors: The-Tuyen Nguyen; Xuan-Luong Vu; Phuong Le-Hong Abstract: In this paper, we report our work on building linguistic resources for Vietnamese social network text analysis in multiple domains. We first describe our annotation methodology including guidelines development, annotation softwares and quality assurance. We then present results of the first pilot phase of the project. Finally, we outline some … Continued

Content Authors: Minh Hai Nguyen, Minh Hoàng Hà, Diep N. Nguyen & The Trung Tran Abstract: The well-known minimum dominating set problem (MDSP) aims to construct the minimum-size subset of vertices in a graph such that every other vertex has at least one neighbor in the subset. In this article, we study a general version of the … Continued

Get ahead with AI-powered technology updates!

Subscribe now to our newsletter for exclusive insights, expert analysis, and cutting-edge developments delivered straight to your inbox!