An LLM Agent builds on the “intelligent brain” of a Large Language Model (LLM) to autonomously plan, break down tasks, and interact with various systems. This allows LLM Agents to optimize business operations, from customer service to data management, and to improve efficiency in multi-step processes. Join FPT.AI to explore LLM Agents in this article, from concepts and mechanisms to their pros and cons.

LLM Agent Overview

Large Language Models (LLMs) are systems that learn complex language patterns from their training data, which lets them analyze and understand natural language and generate contextually appropriate responses.

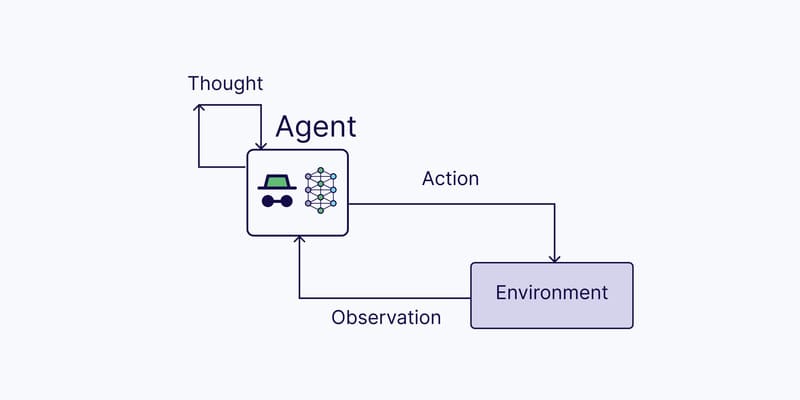

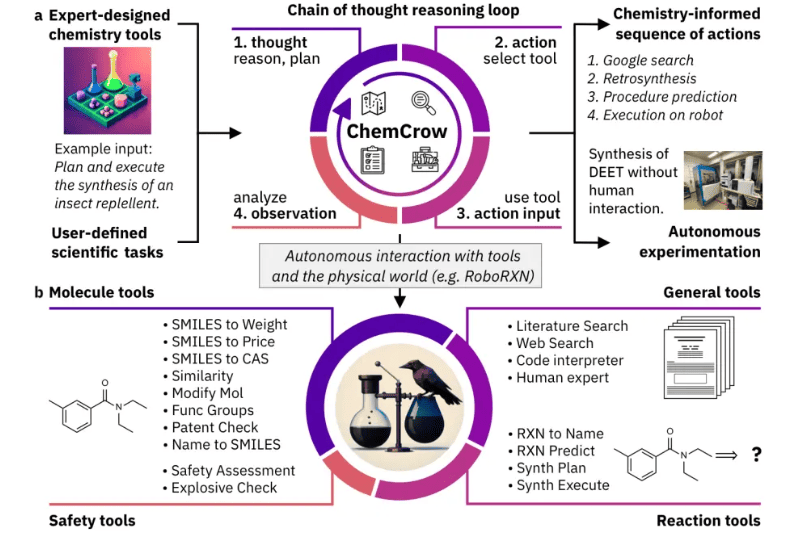

An LLM Agent extends these models beyond basic response generation. It can analyze requests, understand their context, and develop a step-by-step plan for handling them, including gathering information, processing data, and taking appropriate actions.

Throughout this process, LLM Agents can leverage tools like web search, APIs, and databases to enhance performance and meet complex user demands.
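The plan-then-act pattern described above can be sketched as a small loop. This is a minimal illustration under stated assumptions, not FPT.AI's implementation: the planner is hard-coded instead of being generated by an LLM, and the tools `web_search` and `query_database` are hypothetical stubs standing in for a real search engine and database.

```python
from typing import Callable

# Hypothetical stub tools; a real agent would call a search engine, an API, or a database.
def web_search(query: str) -> str:
    return f"search results for '{query}'"

def query_database(query: str) -> str:
    return f"database rows matching '{query}'"

TOOLS: dict[str, Callable[[str], str]] = {
    "web_search": web_search,
    "query_database": query_database,
}

def make_plan(request: str) -> list[tuple[str, str]]:
    """Hypothetical planner: returns (tool name, tool input) steps.
    In a real agent, the LLM would generate this plan from the request."""
    return [("web_search", request), ("query_database", request)]

def run_agent(request: str) -> list[str]:
    """Execute each planned step with its tool and collect the observations."""
    observations: list[str] = []
    for tool_name, tool_input in make_plan(request):
        tool = TOOLS[tool_name]                # look up the tool the plan selected
        observations.append(tool(tool_input))  # run it and record the result
    return observations
```

In a full agent, these observations would be passed back to the LLM so it can write the final answer or revise the plan.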

Key Differences Between LLM and LLM Agent

To better understand the fundamental differences between LLMs and LLM Agents, consider how these technologies handle the question:

“What are the common legal challenges companies face with new data privacy laws, and how have courts handled them?”

| Criteria | Basic LLM | LLM Agent |
| --- | --- | --- |
| Capability | Uses RAG (Retrieval-Augmented Generation) to retrieve and present information on data privacy laws and related court cases. | Goes beyond information retrieval by breaking the question into subtasks: understanding the new regulations, analyzing their impact, and examining how courts have ruled. |
| Limitation | Focused solely on information retrieval; cannot connect laws to real business scenarios or analyze court decisions in depth. | Requires a detailed plan, a reliable data store to track progress, and access to the necessary support tools to operate effectively. |
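For contrast with the agent column, the "Basic LLM" approach is a single retrieve-then-generate pass. The sketch below is a minimal illustration, assuming a tiny in-memory document list and ranking by shared words as a crude stand-in for the embedding search a production RAG system would use; the generation step is left out.

```python
import re

def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric terms."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most terms with the query."""
    query_terms = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(query_terms & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Insert the retrieved passages into one prompt; no planning or follow-up steps."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"
```

Everything happens in one pass: whatever the retriever returns is all the model sees, which is why the table notes that this approach cannot break the question into subtasks.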

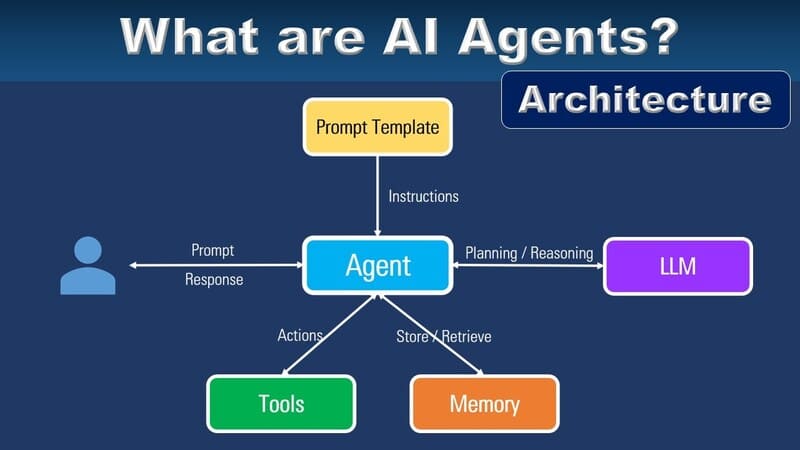

Core Components of an LLM Agent Framework

A complete LLM Agent system consists of several coordinated components. Together, they enable the system not only to answer questions but also to propose contextually relevant strategies and detailed action steps:

- Agent/Brain: The LLM serves as the agent's core reasoning engine. Setting it up starts with a clear prompt, much like giving a driver directions before a trip. Agents can also be assigned personas tailored to specific tasks to improve performance.
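One common way to give an agent a persona is a system message placed before the user's request. The sketch below assumes the role/content message format used by many chat-style LLM APIs; it only builds the messages and does not call any model, and the persona and task strings are illustrative.

```python
def build_messages(persona: str, task: str, user_input: str) -> list[dict[str, str]]:
    """Assemble a system + user message pair that fixes the agent's persona."""
    system_prompt = (
        f"You are {persona}. {task} "
        "Break the request into steps before answering."
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_input},
    ]

# Example: a persona tailored to the legal question used earlier in this article.
messages = build_messages(
    persona="a legal research assistant specializing in data privacy",
    task="Summarize relevant court rulings in plain language.",
    user_input="How have courts handled new data privacy laws?",
)
```

Keeping the persona in the system message, separate from user input, lets the same agent be repurposed for another role by changing only `persona` and `task`.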

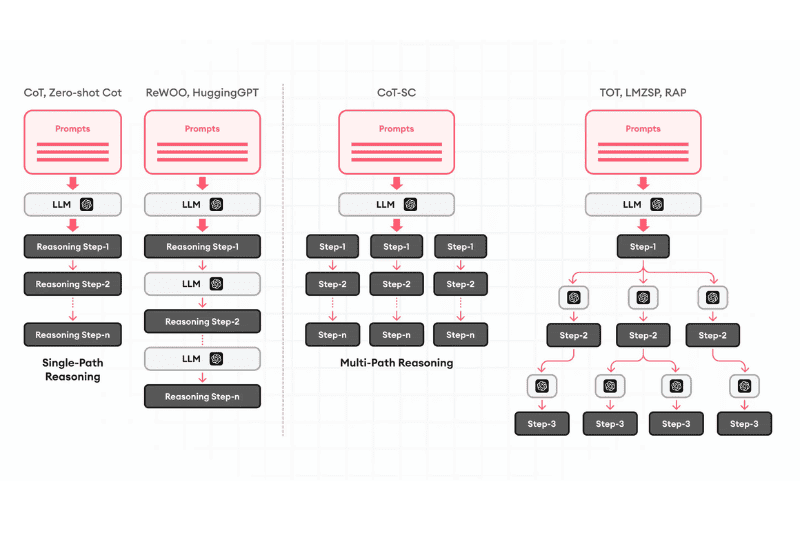

- Planning: Planning without feedback breaks a task into smaller steps up front, using methods such as Chain of Thought (CoT) or Tree of Thoughts (ToT). Planning with feedback adapts the plan based on real-time actions and observations, using techniques such as ReAct and Reflexion to improve task execution dynamically.

- Memory: Short-term memory holds the details needed for the current task, while long-term memory retains past interactions so the agent can recognize patterns and make better decisions.
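Planning with feedback, as described in the Planning item, can be sketched as a ReAct-style loop: the model emits a thought and an action, the agent runs the action, and the observation is appended to the transcript before the next step. This is a minimal sketch, assuming a scripted stand-in for the LLM and a hypothetical `lookup` tool; the `action[argument]` format is loosely modeled on the examples in the ReAct paper.

```python
import re
from typing import Callable

def scripted_llm(transcript: str) -> str:
    """Hypothetical stand-in for an LLM: picks the next step from the transcript."""
    if "Observation:" not in transcript:
        return "Thought: I need to look up the rulings.\nAction: lookup[data privacy rulings]"
    return "Thought: I have what I need.\nAction: finish[3 rulings found]"

def lookup(query: str) -> str:
    # Hypothetical tool; a real agent would query a search API or database.
    return "3 rulings found"

TOOLS: dict[str, Callable[[str], str]] = {"lookup": lookup}
ACTION_RE = re.compile(r"Action: (\w+)\[(.*)\]")

def react(question: str, llm: Callable[[str], str], max_steps: int = 5) -> str:
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)
        transcript += step + "\n"
        match = ACTION_RE.search(step)
        if match is None:
            raise ValueError(f"no action in step: {step!r}")
        name, arg = match.groups()
        if name == "finish":
            return arg  # the model decided it has enough information
        # Feed the observation back so the next step can adapt the plan.
        transcript += f"Observation: {TOOLS[name](arg)}\n"
    raise RuntimeError("step limit reached without a finish action")
```

The `max_steps` cap is a design choice worth keeping in any real loop: without it, a model that never emits `finish` would run indefinitely.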

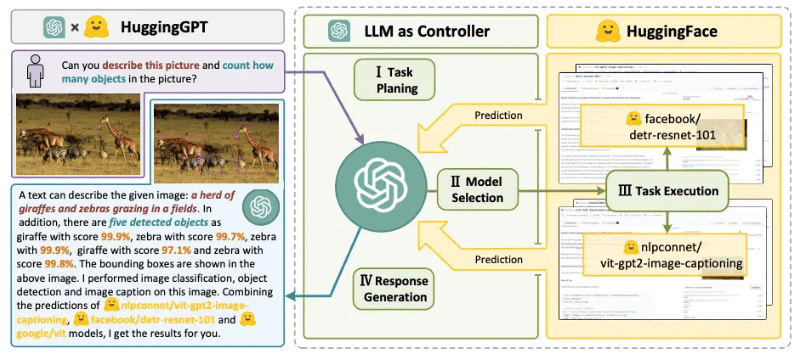
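The short-term/long-term split described under Memory can be sketched with a bounded buffer plus a searchable log. This is a minimal illustration under simplifying assumptions: the buffer size is arbitrary, and recall ranks entries by shared words, where production systems typically use embedding-based vector search.

```python
import re
from collections import deque

def _terms(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

class AgentMemory:
    def __init__(self, short_term_size: int = 3) -> None:
        # Short-term: only the most recent messages; older ones drop out automatically.
        self.short_term: deque[str] = deque(maxlen=short_term_size)
        # Long-term: every message is kept for later recall.
        self.long_term: list[str] = []

    def add(self, message: str) -> None:
        self.short_term.append(message)
        self.long_term.append(message)

    def recall(self, query: str, k: int = 1) -> list[str]:
        """Return the k long-term entries sharing the most terms with the query."""
        query_terms = _terms(query)
        ranked = sorted(self.long_term, key=lambda m: len(query_terms & _terms(m)), reverse=True)
        return ranked[:k]
```

A typical agent would place `short_term` in every prompt and call `recall` only when an older detail becomes relevant, which keeps prompts small.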

- Tool Use: LLM Agents connect to external systems through tool-use frameworks and benchmarks such as MRKL, Toolformer, HuggingGPT, and API-Bank, which let them perform tasks such as information retrieval, coding, or market analysis.
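MRKL-style systems route each request to an "expert" module, which can be a symbolic tool such as a calculator or a knowledge source. The sketch below is a simplified illustration, not the MRKL implementation: routing uses a hard-coded rule where such systems typically let the LLM choose, the calculator accepts only bare `+ - * /` expressions, and `knowledge_lookup` is a hypothetical stub.

```python
import ast
import operator

_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}

def calculator(expression: str) -> str:
    """Symbolic expert: safely evaluates + - * / arithmetic without eval()."""
    def evaluate(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](evaluate(node.left), evaluate(node.right))
        raise ValueError("unsupported expression")
    return str(evaluate(ast.parse(expression, mode="eval").body))

def knowledge_lookup(query: str) -> str:
    # Hypothetical knowledge-base expert; a real system might call an API or database.
    return f"no stored facts for '{query}'"

def route(query: str) -> str:
    """Rule-based router: arithmetic goes to the calculator, everything else to lookup."""
    if any(ch.isdigit() for ch in query) and any(op in query for op in "+-*/"):
        return calculator(query)
    return knowledge_lookup(query)
```

Delegating arithmetic to a deterministic module is the core idea: the language model decides *which* tool to use, and the tool produces the exact result.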

Practical Applications of LLM Agents

LLM Agents are being applied in many fields thanks to their natural language processing capabilities and their ability to handle complex tasks:

- Healthcare: Helps develop treatment plans based on patient symptoms and medical history.

- Legal: Selects and summarizes legal documents to support case resolution.

- Chemistry: Predicts and performs chemical reactions, accelerating research.

- Scientific Research: Analyzes experimental data to provide insights or guide future studies.

- Pharmaceuticals: Converts molecular formulas into practical data for safety and cost analysis.

- Programming: Translates code between languages and identifies critical data patterns.

Challenges of Applying LLM Agents in Practice

Despite their potential, LLM Agents face certain limitations:

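- Context Limitations: Can only keep a limited amount of information (the context window) in view at once, so details may be lost.

- Long-term Planning: Struggles with extended tasks that require adjusting the plan as conditions change.

- Inconsistent Results: Because agents interact with tools and other components through natural language, their outputs can be inconsistent or contain errors.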

- Role-Specific Adaptation: Customizing agents for specialized roles remains a challenge.

- Prompt Dependence: Requires carefully crafted prompts for accuracy.

- Knowledge Management: Ensuring accurate, unbiased, and up-to-date information is crucial.

- Cost and Performance: Operating LLM Agents can be resource-intensive, requiring effective management.
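The context limitation above is often handled by trimming conversation history to a token budget, keeping the newest messages. This is a minimal sketch under a loudly stated assumption: tokens are approximated as whitespace-separated words, whereas real tokenizers (e.g. BPE-based ones) often produce more tokens than words. Older messages are silently dropped, which is exactly how details get missed.

```python
def count_tokens(text: str) -> int:
    # Rough approximation: one token per whitespace-separated word.
    return len(text.split())

def fit_to_context(system: str, history: list[str], budget: int) -> list[str]:
    """Keep the system prompt plus the most recent messages that fit within the budget."""
    remaining = budget - count_tokens(system)
    if remaining < 0:
        raise ValueError("system prompt alone exceeds the budget")
    kept: list[str] = []
    for message in reversed(history):  # walk from newest to oldest
        cost = count_tokens(message)
        if cost > remaining:
            break  # this message and everything older are dropped
        kept.append(message)
        remaining -= cost
    return [system] + list(reversed(kept))  # restore chronological order
```

Stopping at the first message that does not fit keeps the retained history contiguous; the agent-memory approach of recalling older facts on demand is one way to recover what this step discards.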

In short, LLM Agents unlock significant potential for optimizing automation and enhancing human-machine interaction. However, challenges such as long-term planning, role-specific adaptation, and reliability of responses must be addressed. Follow FPT.AI to stay updated on the latest advancements and revolutionary applications of LLM Agents.
