In the field of Generative AI, each Generative Model offers unique approaches and applications. From GANs, VAEs, and Transformers to Diffusion Models and Autoregressive Models, these models not only reflect technological advancements but also unlock groundbreaking solutions across various domains. This article delves into the standout Generative Models, their mechanisms, and potential applications.

Generative Adversarial Networks (GANs)

What Are Generative Adversarial Networks?

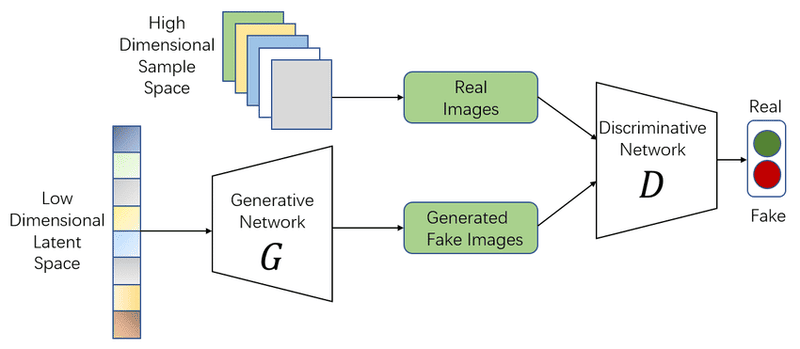

Introduced by Ian Goodfellow and colleagues in 2014, Generative Adversarial Networks (GANs) are among the most popular models in Generative AI. GANs consist of two adversarial neural networks:

- Generator: Attempts to create fake data.

- Discriminator: Learns to distinguish between real and fake data.

The adversarial process ideally continues until both networks reach equilibrium, where the data generated by the Generator is indistinguishable from real data. For instance, in generating human face images, the Generator takes a random vector as input to produce a synthetic face image. The Discriminator, which is trained on both real face images from the dataset and generated ones, estimates the probability that the image is real. Based on this feedback, the Generator improves its output, while the Discriminator sharpens its detection, leading to highly realistic synthetic images.

Training Process of GANs

The training process of GANs involves:

- Initialization: Randomly initializing weights for the Generator and Discriminator.

- Training Loop: The Generator creates fake data, and the Discriminator assesses it as real or fake, assigning a probability score.

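- Backpropagation: The Discriminator's classification error is used to update its own weights. The Generator is then updated with gradients that flow back through the Discriminator, pushing it toward samples the Discriminator scores as real.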

- Sampling: The trained Generator generates new data based on the learned distribution.
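
The training loop and backpropagation steps above are driven by two loss values. The sketch below shows one common way to compute them, assuming the Discriminator outputs a probability that a sample is real and assuming the widely used "non-saturating" Generator loss. The function names `bce` and `gan_losses` are illustrative and not tied to any particular library.

```python
import numpy as np

def bce(probs, targets, eps=1e-12):
    """Binary cross-entropy averaged over a batch."""
    probs = np.clip(probs, eps, 1.0 - eps)  # avoid log(0)
    return float(-np.mean(targets * np.log(probs)
                          + (1.0 - targets) * np.log(1.0 - probs)))

def gan_losses(d_real, d_fake):
    """Compute Discriminator and Generator losses.

    d_real: Discriminator probabilities ("this is real") for real samples.
    d_fake: Discriminator probabilities ("this is real") for generated samples.
    """
    d_real = np.asarray(d_real, dtype=float)
    d_fake = np.asarray(d_fake, dtype=float)
    # The Discriminator wants real -> 1 and fake -> 0.
    d_loss = bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))
    # The Generator (non-saturating form, an assumption here) wants fake -> 1.
    g_loss = bce(d_fake, np.ones_like(d_fake))
    return d_loss, g_loss

# A confident Discriminator: low Discriminator loss, high Generator loss.
d_loss, g_loss = gan_losses(d_real=[0.9, 0.8], d_fake=[0.1, 0.2])
```

In a full training loop, `d_loss` would be minimized with respect to the Discriminator's weights and `g_loss` with respect to the Generator's weights, usually in alternating steps.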

GANs can model complex, multimodal data distributions, producing diverse and high-quality samples. However, training can be unstable, and problems such as mode collapse (the Generator producing only a narrow set of repetitive samples) may arise. Later variants such as StyleGAN, developed by NVIDIA, improved image quality and control over the output, enabling the creation of hyper-realistic human portraits, as seen in the 2019 project “This Person Does Not Exist.”

Potential Applications of GANs

GANs have become a pivotal tool across industries due to their ability to generate high-quality data and learn complex distributions. Their applications include:

- Image and Video Generation: Creating realistic images, videos, and deepfakes.

- Image Style Transfer: Converting day-to-night photos or cartoonizing images.

- Data Augmentation and Synthesis: Enhancing supervised learning models.

- Image Captioning and Synthesis: Generating images from text or auto-captioning.

- 3D Design: Crafting new 3D models.

- Voice and Audio Conversion: Transforming voices or synthesizing sounds.

- Game Development: Designing characters and game content.

- Artistic Creation: Assisting in music composition, literature, and visual arts.

- Security and Privacy: Generating fake data to protect sensitive information and detect deepfakes.

- Scientific Simulation: Predicting and simulating scientific research scenarios.

The effectiveness of GANs depends on input data quality, model architecture, distribution complexity, and training parameters. These factors determine the quality and versatility of the generated outputs.

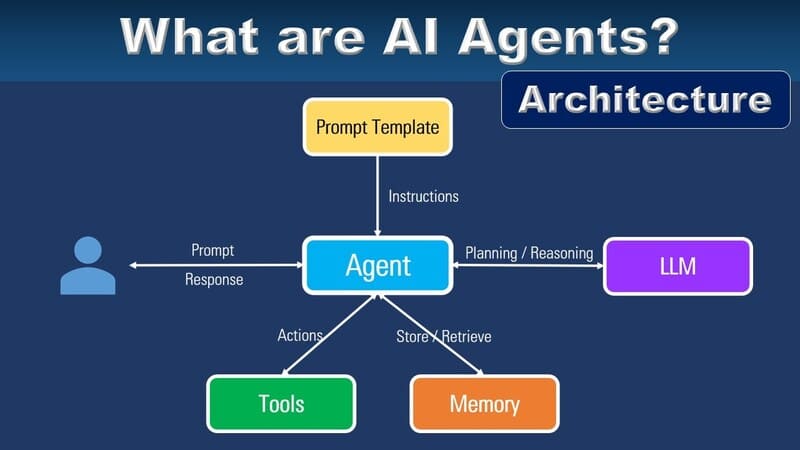

>>> READ NOW: What Are AI Agents? The Difference Between AI Agents and AI Chatbots

Variational Autoencoders (VAEs)

What Are Variational Autoencoders?

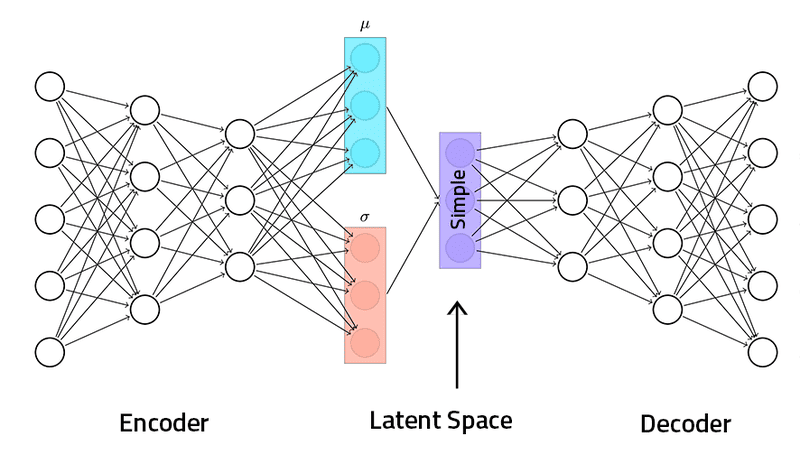

Compared to Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs) provide more controlled outputs. As an extension of Autoencoders, VAEs not only reconstruct data but also represent it in a latent space as a probability distribution. The core idea of VAEs is to encode input data into a Gaussian distribution with parameters for mean and variance.

VAEs operate using two main networks:

- Encoder: Encodes input data into the parameters of a probability distribution in the latent space.

- Decoder: Takes a point sampled from this distribution and reconstructs the input data from it.

The primary goal of VAEs is to minimize reconstruction loss and ensure continuous and complete representations in the latent space, making them particularly effective for data generation and dimensionality reduction.

Training Process of VAEs

The training of a VAE involves:

- Encoding: Input data is compressed into a latent space assumed to follow a Gaussian distribution, represented by mean (μ) and standard deviation (σ). Encoders, built using deep neural networks, learn to compress input data into latent representations.

- Sampling: Instead of directly sampling from the probability distribution, VAEs use the Reparameterization Trick to ensure differentiability during backpropagation. This is done using the formula z=μ+σ⋅ϵ, where ϵ is noise sampled from a standard Gaussian distribution.

- Decoding: The Decoder uses sampled points in the latent space (z) to reconstruct new data. Its goal is to generate outputs that closely resemble the input data.

- Loss Calculation: The training loss combines two terms:

  - Reconstruction Loss: Measures how accurately the Decoder reconstructs input data by comparing input and output.

  - KL-Divergence Loss: Measures the difference between the learned Gaussian distribution and the standard Gaussian distribution in the latent space, ensuring the latent distribution remains close to the standard Gaussian.

- Backpropagation: Based on loss signals, the Encoder and Decoder weights are updated via backpropagation.
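
The sampling and loss steps above can be sketched in a few lines of NumPy. This is a minimal illustration rather than a full VAE: it assumes the Encoder outputs the mean `mu` and the log-variance `log_var` (a common convention, whereas the text above speaks of σ directly), and it assumes a squared-error reconstruction loss. All function names are illustrative.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, I)."""
    sigma = np.exp(0.5 * log_var)          # log-variance -> standard deviation
    eps = rng.standard_normal(mu.shape)    # the only source of randomness
    return mu + sigma * eps

def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, I)), summed over latent dims, averaged over the batch."""
    kl_per_dim = 0.5 * (np.exp(log_var) + mu ** 2 - 1.0 - log_var)
    return float(np.mean(np.sum(kl_per_dim, axis=1)))

def reconstruction_loss(x, x_hat):
    """Squared error summed over features, averaged over the batch (an assumed choice)."""
    return float(np.mean(np.sum((x - x_hat) ** 2, axis=1)))

def vae_loss(x, x_hat, mu, log_var):
    """Total loss = reconstruction term + KL term."""
    return reconstruction_loss(x, x_hat) + kl_to_standard_normal(mu, log_var)

rng = np.random.default_rng(0)
mu = np.zeros((4, 2))        # batch of 4, latent dimension 2
log_var = np.zeros((4, 2))   # sigma = 1 everywhere
z = reparameterize(mu, log_var, rng)
```

Because the randomness is isolated in `eps`, gradients can flow through `mu` and `sigma` when the same computation is written in an automatic-differentiation framework.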

After training, VAEs can sample from the Gaussian distribution to generate new data with well-structured features, compress data into low-dimensional latent representations, or extract key features from input data. However, VAEs often produce blurrier, less detailed samples than GANs, an effect commonly attributed to the pixel-wise reconstruction loss and the simple Gaussian assumptions.

To enhance output quality, methods like adversarial training and flow-based models have been proposed.

Diffusion Models

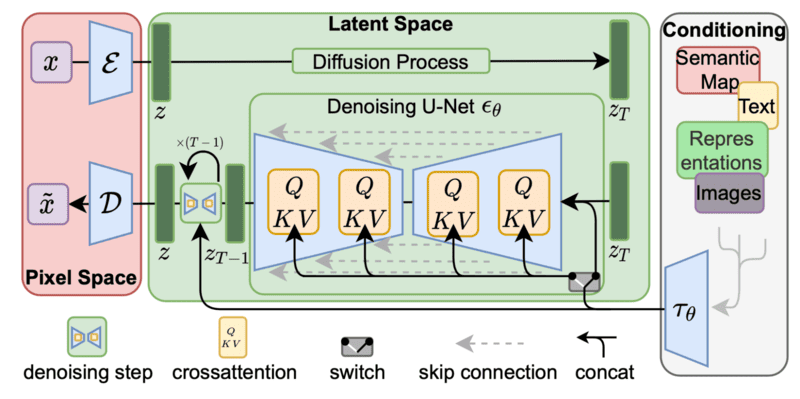

In the realm of image generation, Diffusion Models have emerged as a powerful alternative to Generative Adversarial Networks (GANs) due to their ability to capture complex relationships between pixels by adding and removing noise.

The diffusion process begins by incrementally adding noise to the original data over several iterations, increasing the noise level with each step. Subsequently, Diffusion Models learn to denoise the data step-by-step, eventually reconstructing the original data.
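
The noise-adding half of this process can be sketched as follows. The example assumes a linear noise schedule and the closed-form shortcut that jumps directly from the original data to any noise level. The schedule values (1000 steps, noise rates from 1e-4 to 0.02) are common defaults chosen for illustration, not values taken from this article.

```python
import numpy as np

def make_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule (assumed values). Returns betas and cumulative signal levels."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alpha_bars = np.cumprod(1.0 - betas)   # fraction of signal variance kept after t steps
    return betas, alpha_bars

def add_noise(x0, t, alpha_bars, rng):
    """Sample the noisy x_t directly from clean data x0.

    x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps, with eps ~ N(0, I).
    """
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * eps
    return x_t, eps

rng = np.random.default_rng(0)
betas, alpha_bars = make_schedule()
x0 = np.ones((8, 8))                                    # stand-in for an image
x_early, _ = add_noise(x0, 10, alpha_bars, rng)         # still close to x0
x_late, _ = add_noise(x0, 999, alpha_bars, rng)         # almost pure noise
```

As `t` grows, `alpha_bars[t]` shrinks toward zero, so the sample contains less and less of the original data and more and more noise.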

Once trained, these models can apply the learned denoising process to generate new data: starting from pure random noise, optionally guided by a condition such as a text prompt, the model removes noise step by step until a new sample emerges. Over the course of training, they become better at capturing data patterns and structures, which makes their denoising more accurate.

Key features of Diffusion Models include:

- Generally more stable to train than GANs, because they optimize a simple denoising objective (typically a mean squared error on the predicted noise) rather than an adversarial game between two networks.

- Applicable to a wide range of generative tasks, including image synthesis, video prediction, and text generation.
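
As a rough illustration of how a diffusion model is trained, the sketch below computes a noise-prediction loss for a single noise level: the model sees a noisy sample and is scored on how well it predicts the noise that was added. The `predict_noise` argument stands in for a neural network and is hypothetical. The two predictors in the example are toy stand-ins used only to show the range of the loss.

```python
import numpy as np

def denoising_loss(predict_noise, x0, t, alpha_bar_t, rng):
    """Mean squared error between the added noise and the predicted noise."""
    eps = rng.standard_normal(x0.shape)
    # Noisy input at step t, built from clean data and fresh noise.
    x_t = np.sqrt(alpha_bar_t) * x0 + np.sqrt(1.0 - alpha_bar_t) * eps
    eps_hat = predict_noise(x_t, t)        # hypothetical model call
    return float(np.mean((eps_hat - eps) ** 2))

x0 = np.linspace(-1.0, 1.0, 16).reshape(4, 4)   # stand-in for training data
alpha_bar_t = 0.5                                # assumed signal level at step t

def zero_predictor(x_t, t):
    """Untrained baseline: always predicts 'no noise'."""
    return np.zeros_like(x_t)

def oracle_predictor(x_t, t):
    """Cheats by using x0 to recover the exact noise. A real model must learn this."""
    return (x_t - np.sqrt(alpha_bar_t) * x0) / np.sqrt(1.0 - alpha_bar_t)

baseline = denoising_loss(zero_predictor, x0, 10, alpha_bar_t, np.random.default_rng(0))
perfect = denoising_loss(oracle_predictor, x0, 10, alpha_bar_t, np.random.default_rng(0))
```

Training pushes a real network from something like the baseline toward the oracle's behavior, averaged over many data points and noise levels.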

For example, DALL-E 2, a text-to-image system developed by OpenAI, uses a Diffusion Model to create intricate, detailed images from text descriptions. Users enter a text prompt, and the model generates an image that matches it.

Autoregressive Models

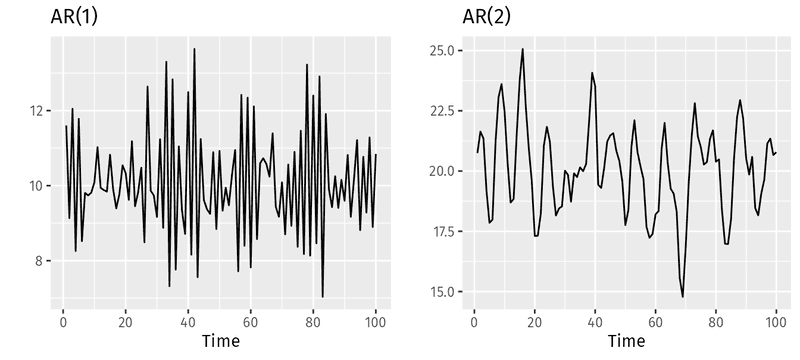

Autoregressive Models generate sequential data by predicting each element in a sequence based on previous elements using conditional probability models. These models are commonly used in Natural Language Processing (NLP) for tasks like text generation and translation.
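
To make the idea of conditional next-element prediction concrete, here is a toy sketch of an autoregressive text generator. It assumes the simplest possible conditioning, where each word depends only on the one before it (a bigram model); real language models condition on much longer contexts. The function names are illustrative.

```python
import random
from collections import Counter, defaultdict

def fit_bigram(tokens):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, start, length, seed=0):
    """Generate tokens one at a time, each sampled given the previous token."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        options = counts.get(out[-1])
        if not options:                    # no observed continuation: stop early
            break
        words = list(options)
        weights = [options[w] for w in words]   # sample in proportion to counts
        out.append(rng.choices(words, weights=weights, k=1)[0])
    return out

tokens = "the cat sat on the mat".split()
model = fit_bigram(tokens)
sample = generate(model, start="the", length=5)
```

Each generated token is fed back in as the context for the next one, which is the defining loop of autoregressive generation.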

Simply put, Autoregressive Models predict the next value in a sequence by analyzing preceding values. For example, in a stock price sequence, the model can forecast the next day’s price based on data from previous days.
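
The forecasting example can be sketched with a linear autoregressive model fitted by least squares. This is an illustration on a synthetic series, assuming the next value is a weighted sum of the previous `order` values plus a constant. Real price series are noisy, and this is not meant as a trading model. The helper names `fit_ar` and `predict_next` are illustrative.

```python
import numpy as np

def fit_ar(series, order):
    """Fit x_t = c + a_1 * x_{t-order} + ... + a_order * x_{t-1} by least squares."""
    series = np.asarray(series, dtype=float)
    # Each row holds `order` consecutive past values; the target is the value after them.
    rows = [series[i:i + order] for i in range(len(series) - order)]
    X = np.column_stack([np.ones(len(rows)), np.array(rows)])
    y = series[order:]
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef                            # [c, a_1, ..., a_order]

def predict_next(series, coef):
    """Forecast the next value from the most recent `order` values."""
    order = len(coef) - 1
    recent = np.asarray(series[-order:], dtype=float)
    return float(coef[0] + recent @ coef[1:])

# Synthetic series following x_t = 1 + 0.5 * x_{t-1}, starting at 0.
series = [0.0]
for _ in range(9):
    series.append(1.0 + 0.5 * series[-1])

coef = fit_ar(series, order=1)
forecast = predict_next(series, coef)
```

On this noise-free series the fit recovers the generating rule, so the forecast matches the value the rule would produce next.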

Large Language Models (LLMs) like GPT (Generative Pre-trained Transformer) use autoregressive architectures to generate human-like responses. BERT, by contrast, is trained with masked language modeling and is not autoregressive, so it is better suited to understanding text than to generating it. Trained on vast amounts of text data, ranging from articles and books to web content, autoregressive LLMs can create new text that mimics the style and context of the original data.

In summary, Generative Models like GANs, VAEs, Transformers, Diffusion Models, and Autoregressive Models are reshaping how we create and process data. Each model offers unique approaches and applications, ranging from content creation and natural language processing to image development and scientific simulation. With immense potential, Generative AI not only supports automation but also drives innovation across various industries, becoming a vital tool for businesses and organizations to surpass creative and performance limits.